Local Variables and Statistics

I thought I’d open up with some basic T-SQL performance tips, the sort of things I wish I knew when I was starting my journey as a software engineer. Today’s post is about “Local Variables” and how the query optimiser uses them.

First, let’s create some sample data to play with.

USE tempdb;

SET NOCOUNT ON;

IF object_id('dbo.testEnvironment') IS NOT NULL

BEGIN;

DROP TABLE dbo.testEnvironment;

END;

--1,000,000 Pseudo-Random rows of data

SELECT TOP 1000000 IDENTITY(INT,1,1) AS ID,

RAND(CHECKSUM(NEWID())) * 30000 /*(Number of days in range)*/ + CAST('1945' AS DATETIME) /*(Start date, e.g. '1945-01-01 00:00:00*/ AS randomDateTime,

DATEADD(DAY,((ABS(CHECKSUM(NEWID())) % 366 /*(Number of days in range)*/) + 1),CAST('1945' AS DATE) /*(Start date, e.g.'1945-01-01*/) AS randomDate,

ABS(CHECKSUM(NEWID())) AS randomBigInt,

(ABS(CHECKSUM(NEWID())) % 100) + 1 AS randomSmallInt,

RAND(CHECKSUM(NEWID())) * 100 AS randomSmallDec,

RAND(CHECKSUM(NEWID())) AS randomTinyDec,

RAND(CHECKSUM(NEWID())) * 100000 AS randomBigDec,

CONVERT(VARCHAR(6),CONVERT(MONEY,RAND(CHECKSUM(NEWID())) * 100),0) AS randomMoney

INTO dbo.testEnvironment

FROM master.dbo.syscolumns sc1

CROSS JOIN master.dbo.syscolumns sc2

CROSS JOIN master.dbo.syscolumns sc3;

INSERT INTO dbo.testEnvironment(randomDateTime,randomDate)

VALUES('19550101','19550101');

CREATE UNIQUE CLUSTERED INDEX PK_testEnvironment ON dbo.testEnvironment(ID);

CREATE INDEX IX_dateTime_testEnvironment ON dbo.testEnvironment(randomDateTime);

GO

Now, we’ll turn on statistics so that we can take a look at what is going on.

SET STATISTICS IO ON;

GO

If we fire off a SELECT statement now against this table where we look for rows with an ID greater than 55258, will using a variable change the execution of the query?

DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;

SET STATISTICS IO ON;

SET NOCOUNT ON;

GO

PRINT 'Hardcoded';

SELECT COUNT(*)

FROM dbo.testEnvironment

WHERE ID > 55258;

GO

DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;

GO

PRINT 'Variable';

DECLARE @INT INT = 55258;

SELECT COUNT(*)

FROM dbo.testEnvironment

WHERE ID > @INT;

GO

Under results, we get the same returned row as expected.

Under messages, the IO statistics: -

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable

Table 'testEnvironment'. Scan count 1, logical reads 7767, physical reads 1, read-ahead reads 7762, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

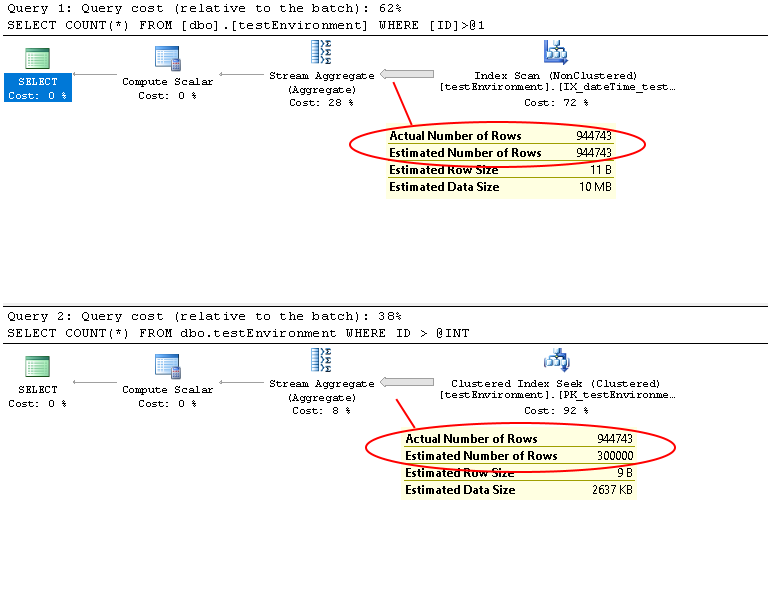

And finally, the execution plans: -

The hardcoded value claims to be taking up 62% of the cost of the batch whilst the variable claims to only be taking up 38% of the batch. I’ve circled the estimated number of rows to be returned by each query. As you can see, the hardcoded value has estimated that we’re going to be returning 944,743 rows whilst the variable only estimates 300,000 rows returned. A lot of developers that are less familiar with SQL Server assume that the query with the highest cost relative to the batch will be the slowest, so let’s take a look at some query durations: -

SET STATISTICS IO ON;

SET NOCOUNT ON;

GO

DECLARE @LOOP INT = 0;

WHILE @LOOP <= 5

BEGIN

RAISERROR('============',0,1) WITH NOWAIT;

DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;

IF OBJECT_ID('tempdb..#holder') IS NOT NULL

BEGIN

DROP TABLE [#holder];

END;

PRINT 'Hardcoded';

DECLARE @Duration CHAR(12),

@StartTime DATETIME;

SET @StartTime = GETUTCDATE();

SELECT COUNT(*) AS A

INTO [#holder]

FROM [dbo].[testEnvironment]

WHERE [ID] > 55258;

SELECT @Duration = CONVERT(CHAR(12), GETUTCDATE() - @StartTime, 114);

RAISERROR('Hardcoded Duration: %s',0,1,@Duration) WITH NOWAIT;

DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;

IF OBJECT_ID('tempdb..#holder2') IS NOT NULL

BEGIN

DROP TABLE [#holder2];

END;

PRINT 'Variable';

DECLARE @INT INT = 55258;

SET @StartTime = GETUTCDATE();

SELECT COUNT(*) AS A

INTO [#holder2]

FROM [dbo].[testEnvironment]

WHERE [ID] > @INT;

SELECT @Duration = CONVERT(CHAR(12), GETUTCDATE() - @StartTime, 114);

RAISERROR('Variable Duration: %s',0,1,@Duration) WITH NOWAIT;

DROP TABLE [#holder];

DROP TABLE [#holder2];

SET @LOOP = @LOOP + 1;

END;

What the above is doing is running the queries in a loop, calculating the time it took to get the count and insert in to a holder table. Here’s the results from the messages tab: -

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:420

Variable

Table 'testEnvironment'. Scan count 1, logical reads 7767, physical reads 1, read-ahead reads 7762, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:01:270

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:427

Variable

Table 'testEnvironment'. Scan count 1, logical reads 7767, physical reads 1, read-ahead reads 7762, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:01:310

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:407

Variable

Table 'testEnvironment'. Scan count 1, logical reads 7767, physical reads 1, read-ahead reads 7762, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:01:227

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:440

Variable

Table 'testEnvironment'. Scan count 1, logical reads 7767, physical reads 1, read-ahead reads 7762, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:01:200

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:433

Variable

Table 'testEnvironment'. Scan count 1, logical reads 7767, physical reads 1, read-ahead reads 7762, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:01:333

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:437

Variable

Table 'testEnvironment'. Scan count 1, logical reads 7767, physical reads 1, read-ahead reads 7762, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:01:120

To make that a bit easier to read, here it is again in a table: -

| Loop Number | Hardcode Value Query Duration | Variable Query Duration |

|---|---|---|

| 0 | 00:00:00:420 | 00:00:01:270 |

| 1 | 00:00:00:427 | 00:00:01:310 |

| 2 | 00:00:00:407 | 00:00:01:227 |

| 3 | 00:00:00:440 | 00:00:01:200 |

| 4 | 00:00:00:433 | 00:00:01:333 |

| 5 | 00:00:00:437 | 00:00:01:120 |

So the variable query, despite costing 38% of the batch, took roughly 3 times longer to execute than the hardcoded query on every execution. We can fix this issue with variables by forcing the SQL Server query optimiser to always compile a new execution plan rather than to use any cached plans, however this has the downside of meaning that the SQL Server query optimiser will always have to generate a new plan, even if the same query with the same parameters is executed multiple times during a day.

SET STATISTICS IO ON;

SET NOCOUNT ON;

GO

DECLARE @LOOP INT = 0;

WHILE @LOOP <= 5

BEGIN

RAISERROR('============',0,1) WITH NOWAIT;

DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;

IF OBJECT_ID('tempdb..#holder') IS NOT NULL

BEGIN

DROP TABLE [#holder];

END;

PRINT 'Hardcoded';

DECLARE @Duration CHAR(12),

@StartTime DATETIME;

SET @StartTime = GETUTCDATE();

SELECT COUNT(*) AS A

INTO [#holder]

FROM [dbo].[testEnvironment]

WHERE [ID] > 55258;

SELECT @Duration = CONVERT(CHAR(12), GETUTCDATE() - @StartTime, 114);

RAISERROR('Hardcoded Duration: %s',0,1,@Duration) WITH NOWAIT;

DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;

IF OBJECT_ID('tempdb..#holder2') IS NOT NULL

BEGIN

DROP TABLE [#holder2];

END;

PRINT 'Variable';

DECLARE @INT INT = 55258;

SET @StartTime = GETUTCDATE();

SELECT COUNT(*) AS A

INTO [#holder2]

FROM [dbo].[testEnvironment]

WHERE [ID] > @INT

OPTION(RECOMPILE);

SELECT @Duration = CONVERT(CHAR(12), GETUTCDATE() - @StartTime, 114);

RAISERROR('Variable Duration: %s',0,1,@Duration) WITH NOWAIT;

DROP TABLE [#holder];

DROP TABLE [#holder2];

SET @LOOP = @LOOP + 1;

END;

All I’ve done there is add “OPTION(RECOMPILE)” to the end of the query using a variable, which we can see has the affect of making the two queries identical (differences in the execution times are not statistically relevant).

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:423

Variable

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:00:157

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:130

Variable

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:00:107

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:227

Variable

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:00:187

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:133

Variable

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:00:117

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:107

Variable

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:00:213

============

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Hardcoded Duration: 00:00:00:177

Variable

Table 'testEnvironment'. Scan count 1, logical reads 2241, physical reads 3, read-ahead reads 2279, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable Duration: 00:00:00:167

To make that a bit easier to read, here it is again in a table: -

| Loop Number | Hardcode Value Query Duration | Variable Query Duration |

|---|---|---|

| 0 | 00:00:00:423 | 00:00:00:157 |

| 1 | 00:00:00:130 | 00:00:00:107 |

| 2 | 00:00:00:227 | 00:00:00:187 |

| 3 | 00:00:00:133 | 00:00:00:117 |

| 4 | 00:00:00:107 | 00:00:00:213 |

| 5 | 00:00:00:177 | 00:00:00:167 |

Let’s stick another million rows of data into our sample data.

INSERT INTO dbo.testEnvironment (randomDateTime, randomDate, randomBigInt, randomSmallInt, randomSmallDec, randomTinyDec, randomBigDec, randomMoney)

SELECT TOP 1000000 '9999-12-31' AS randomDateTime,

DATEADD(DAY,((ABS(CHECKSUM(NEWID())) % 366 /*(Number of days in range)*/) + 1),CAST('1945' AS DATE) /*(Start date, e.g.'1945-01-01*/) AS randomDate,

ABS(CHECKSUM(NEWID())) AS randomBigInt,

(ABS(CHECKSUM(NEWID())) % 100) + 1 AS randomSmallInt,

RAND(CHECKSUM(NEWID())) * 100 AS randomSmallDec,

RAND(CHECKSUM(NEWID())) AS randomTinyDec,

RAND(CHECKSUM(NEWID())) * 100000 AS randomBigDec,

CONVERT(VARCHAR(6),CONVERT(MONEY,RAND(CHECKSUM(NEWID())) * 100),0) AS randomMoney

FROM master.dbo.syscolumns sc1

CROSS JOIN master.dbo.syscolumns sc2

CROSS JOIN master.dbo.syscolumns sc3;

GO

Take a quick look at the statistics and we’ll see that SQL server is missing the extra million rows: -

DBCC SHOW_STATISTICS('dbo.testEnvironment','PK_testEnvironment');

SELECT COUNT(*) AS [RowCount] FROM dbo.testEnvironment;

GO

You’ll see rows in the statistics is 1,000,001 whilst the count returns the correct 2,000,001 rows. Let’s update our statistics: -

UPDATE STATISTICS dbo.testEnvironment WITH FULLSCAN;

DBCC SHOW_STATISTICS('dbo.testEnvironment','PK_testEnvironment');

GO

Now we can run a new query against the randomDateTime column: -

SET STATISTICS IO ON;

SET NOCOUNT ON;

GO

DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;

DECLARE @HOLDER INT;

PRINT 'Hardcoded';

SELECT @HOLDER = ID

FROM dbo.testEnvironment

WHERE randomDateTime = '99991231';

GO

CHECKPOINT;

DBCC DROPCLEANBUFFERS WITH NO_INFOMSGS;

DECLARE @HOLDER INT;

PRINT 'Variable';

DECLARE @DATETIME DATETIME = '99991231';

SELECT @HOLDER = ID

FROM dbo.testEnvironment

WHERE randomDateTime = @DATETIME;

GO

SET STATISTICS IO OFF;

SET NOCOUNT OFF;

GO

Hardcoded

Table 'testEnvironment'. Scan count 1, logical reads 2244, physical reads 3, read-ahead reads 2243, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Variable

Table 'testEnvironment'. Scan count 1, logical reads 2244, physical reads 5, read-ahead reads 2227, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

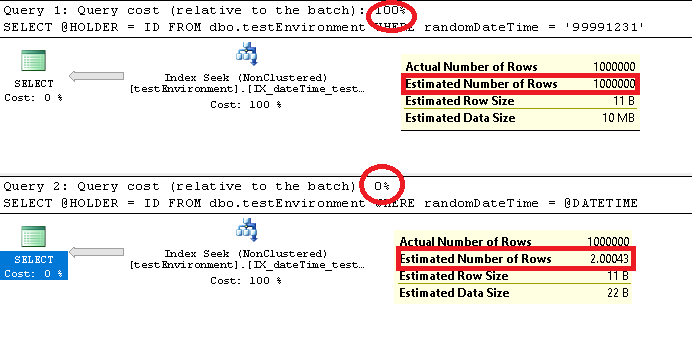

So they’ve both seeked on the same index and both have produced the same results however one query has a few more physical reads and a few less read-ahead reads. Firstly, let’s look at how the queries have worked out their estimates. Going back to the statistics: -

DBCC SHOW_STATISTICS('dbo.testEnvironment','IX_dateTime_testEnvironment');

GO

Output 1

| Name | Updated | Rows | Rows Sampled | Steps | Density | Average key length | String Index | Filter Expression | Unfiltered Rows | Persisted Sample Percent |

|---|---|---|---|---|---|---|---|---|---|---|

| IX_dateTime_testEnvironment | Jul 20 2019 3:41PM | 2000001 | 2000001 | 8 | 0.999788 | 12 | NO | NULL | 2000001 | 0 |

Output 2

| All density | Average Length | Columns |

|---|---|---|

| 1.000215E-06 | 8 | randomDateTime |

| 4.999998E-07 | 12 | randomDateTime, ID |

Output 3

| RANGE_HI_KEY | RANGE_ROWS | EQ_ROWS | DISTINCT_RANGE_ROWS | AVG_RANGE_ROWS |

|---|---|---|---|---|

| 1945-01-01 00:18:23.193 | 0 | 1 | 0 | 1 |

| 1945-08-23 01:25:49.040 | 7883 | 2 | 7882 | 1.000127 |

| 1946-03-17 17:03:08.953 | 6820 | 2 | 6818 | 1.000293 |

| 1946-09-12 13:31:09.950 | 6104 | 2 | 6104 | 1 |

| 1947-02-24 17:03:01.117 | 5527 | 2 | 5526 | 1.000181 |

| 1948-01-25 06:32:21.350 | 11185 | 2 | 11185 | 1 |

| 2027-02-19 23:26:20.133 | 962470 | 1 | 962262 | 1.000216 |

| 9999-12-31 00:00:00.000 | 0 | 1000000 | 0 | 1 |

Starting with the query variable, the query optimiser takes the all density value for the index (1.000215E-06) then multiplies this by the number of rows in the index (2,000,001). You can do this in T-SQL by running: -

SELECT 0.000001000215 * 2000001;

Which returns “2.000431000215”. If we look back at the estimated rows from the execution plan, you’ll see it was “2.00043”.

For the hardcoded value, we instead look at the third table from the statistics. If we were to query the table with the key “1945-01-01 00:18:23.193”, e.g.

SELECT COUNT(*)

FROM dbo.testEnvironment

WHERE randomDateTime = '1945-01-01 00:18:23.193';

The number of rows returned will be “1”, which we can see in the statistics table by looking at the EQ_ROWS column for that key. As we used the key “99991231”, which SQL Server will have converted to “9999-12-31 00:00:00.000”, the query optimiser can look at the statistics table and estimate that the number of rows returned will be the same at the EQ_ROWS column for that key whch is 1,000,000.

The RANGE_ROWS column in that table tells us how many rows there are between the current RANGE_HI_KEY and the previous RANGE_HI_KEY. So between “1945-01-01 00:18:23.193” and “1945-08-23 01:25:49.040”, we have 7,883. This can be checked with: -

SELECT COUNT(*)

FROM [dbo].[testEnvironment]

WHERE [randomDateTime] > '1945-01-01 00:18:23.193'

AND [randomDateTime] < '1945-08-23 01:25:49.040';

Which of course, returns 7,883.

The DISTINCT_RANGE_ROWS columns tells us how many distinct rows there are between the current RANGE_HI_KEY and the previous RANGE_HI_KEY. So between “1945-01-01 00:18:23.193” and “1945-08-23 01:25:49.040”, we have 7,882. This can be checked with: -

SELECT COUNT(DISTINCT([randomDateTime]))

FROM [dbo].[testEnvironment]

WHERE [randomDateTime] > '1945-01-01 00:18:23.193'

AND [randomDateTime] < '1945-08-23 01:25:49.040';

Which returns 7,882.

Finally, we have AVG_RANGE_ROWS which tells us the number of rows with duplicate column values, excluding the upper bound. This can be worked out by dividing RANGE_ROWS by DISTINCT_RANGE_ROWS (when DISTINCT_RANGE_ROWS is greater than 0).

Share on: